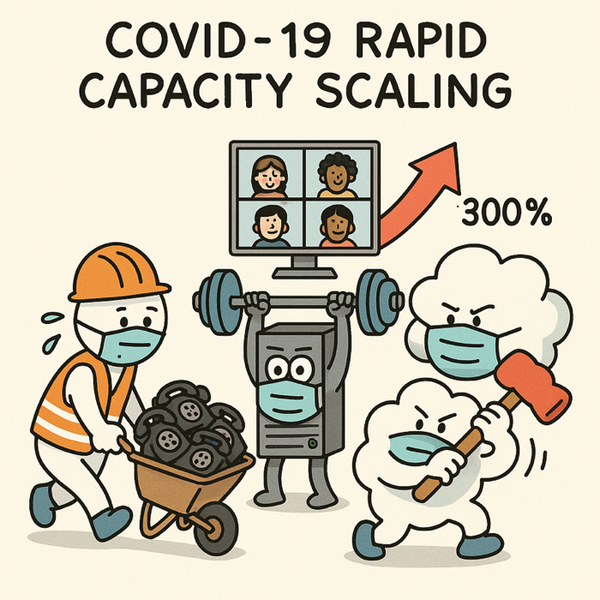

When the COVID-19 pandemic triggered an unprecedented shift to remote work, usage of our UCaaS platforms surged by 300%. I led rapid capacity scaling across our AWS infrastructure, deploying dynamic Auto Scaling Groups (ASGs), heartbeat-based instance monitoring, and cookie-based NGINX load balancing to maintain high availability.

We introduced a custom AWS Lambda-based termination policy to gracefully decommission idle instances without disrupting active user sessions. Automated Jenkins and Terraform pipelines handled AMI promotion and deployment across multiple environments, while Consul and Prometheus provided consistent, real-time monitoring of scaling activity.
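The candidate-selection half of such a termination policy might look like the sketch below. It is illustrative only: the names `InstanceLoad` and `select_scale_in_candidates`, the least-loaded-first ordering, and the reuse of the 30% ASG scale-in threshold as the "underutilized" cutoff are all assumptions, not the production Lambda. A real handler would then tag the returned IDs (for example with boto3's `EC2.Client.create_tags`), and each instance would still confirm it is safe to exit before shutting down.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InstanceLoad:
    instance_id: str
    cpu_percent: float  # recent average CPU utilization, e.g. read from CloudWatch


def select_scale_in_candidates(
    fleet: List[InstanceLoad],
    excess_count: int,
    idle_cpu_percent: float = 30.0,  # assumption: reuse the ASG scale-in threshold
) -> List[str]:
    """Return up to `excess_count` underutilized instance IDs, least loaded first.

    This only chooses candidates to tag. The instances themselves still confirm
    they are not in maintenance and not serving calls before they exit.
    """
    underused = [i for i in fleet if i.cpu_percent < idle_cpu_percent]
    underused.sort(key=lambda i: i.cpu_percent)
    return [i.instance_id for i in underused[: max(excess_count, 0)]]


if __name__ == "__main__":
    fleet = [
        InstanceLoad("i-a", 12.0),
        InstanceLoad("i-b", 5.0),
        InstanceLoad("i-c", 28.0),
        InstanceLoad("i-d", 61.0),  # busy: never selected
    ]
    print(select_scale_in_candidates(fleet, excess_count=2))  # ['i-b', 'i-a']
```

Tagging rather than terminating directly keeps the final decision on the instance, which is the only component that knows whether calls are still in progress.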

These measures enabled uninterrupted service at peak pandemic load, supported global expansion, and laid the foundation for long-term scalability and resilience.

Highlights

- Scaled conferencing infrastructure by over 300% across five AWS regions (spanning the US, EU, CA, and AP region groups) during the early pandemic response

- Achieved zero major outages by combining static schedule-based scaling and dynamic CPU-based thresholds

- The team built a fully automated AMI promotion and deployment pipeline for all environments using Jenkins, Terraform, and DynamoDB, and designed a custom heartbeat agent for instance health monitoring, maintenance management, and controlled scale-in

How It Works

- Monitoring and Scaling: AWS CloudWatch tracks CPU utilization. ASGs scale out when utilization exceeds 50% and scale in when it falls below 30%, with thresholds adjusted per region

- Custom Termination: A Lambda function identifies underutilized instances and tags them for self-termination. Instances verify they are not in maintenance or serving calls before exiting

- DNS and Routing: Load balancing for WebSocket connections was implemented using cookies, NGINX, and AWS ALB. Static and dynamic DNS ensured reliable user-to-server routing

- AMI Promotion: New server versions were packaged into AMIs and promoted to environments via an automated Jenkins pipeline that updated ASG launch templates through Terraform
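The threshold rule above amounts to a scaling decision with a dead band between the scale-in and scale-out levels. In AWS this is normally configured as CloudWatch alarms attached to ASG scaling policies rather than written as code; the function below is only a sketch of the decision logic. The 50% and 30% defaults come from the text, while the `ap-southeast-1` override values are hypothetical placeholders, since the tuned per-region numbers are not given.

```python
from typing import Dict, Tuple

# (scale_in_below, scale_out_above) in percent CPU. Defaults come from the text.
DEFAULT_THRESHOLDS: Tuple[float, float] = (30.0, 50.0)

# Hypothetical per-region overrides; the tuned production values are not published.
REGION_THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "ap-southeast-1": (25.0, 45.0),
}


def scaling_action(region: str, avg_cpu_percent: float) -> str:
    """Map a region's average ASG CPU utilization to a scaling decision."""
    scale_in_below, scale_out_above = REGION_THRESHOLDS.get(region, DEFAULT_THRESHOLDS)
    if avg_cpu_percent > scale_out_above:
        return "scale_out"
    if avg_cpu_percent < scale_in_below:
        return "scale_in"
    # The gap between the two thresholds is a dead band that prevents flapping.
    return "hold"


if __name__ == "__main__":
    for region, cpu in [("us-east-1", 72.0), ("us-east-1", 40.0),
                        ("us-east-1", 20.0), ("ap-southeast-1", 47.0)]:
        print(region, cpu, scaling_action(region, cpu))
```

The 20-point gap means an ASG that has just scaled out does not immediately scale back in as load redistributes across the new instances.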

Implementation

- A cross-region AWS Auto Scaling framework tailored for Media and Meeting workloads, based on SFU and Janus/FS architecture.

- Custom Python-based heartbeat daemon to manage server health and lifecycle via API.

- Used Prometheus + Consul for dynamic service discovery and infrastructure-aware metrics collection.

- Cookie-based user affinity routing and fallback handling with AWS ALB and NGINX.

- Deployment safety using canary-style rollouts with separate ASGs for partial releases
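The instance-side half of controlled scale-in, where the heartbeat daemon exits only once it is safe, could be sketched as follows. The field names, the polling interval, and the injected `read_state` and `on_terminate` callbacks are hypothetical: the source does not describe how the daemon reads its termination tag or call count, so those are passed in as functions. The rule itself follows the text: terminate only when tagged, not in maintenance, and not serving calls.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class NodeState:
    tagged_for_termination: bool  # set by the scale-in Lambda
    in_maintenance: bool          # maintenance flag managed through the daemon's API
    active_calls: int             # calls currently hosted on this node


def should_self_terminate(state: NodeState) -> bool:
    """Safe to exit only when tagged, not in maintenance, and serving no calls."""
    return (state.tagged_for_termination
            and not state.in_maintenance
            and state.active_calls == 0)


def heartbeat_loop(read_state: Callable[[], NodeState],
                   on_terminate: Callable[[], None],
                   interval_s: float = 10.0,
                   max_beats: Optional[int] = None) -> bool:
    """Poll node state each beat; call `on_terminate` once it is safe to exit.

    Returns True if termination was triggered, False if `max_beats` ran out.
    """
    beats = 0
    while max_beats is None or beats < max_beats:
        if should_self_terminate(read_state()):
            on_terminate()  # e.g. deregister from discovery, then shut down
            return True
        beats += 1
        time.sleep(interval_s)
    return False


if __name__ == "__main__":
    # Simulated node: still hosting 3 calls, then drained on the next beat.
    states = iter([NodeState(True, False, 3), NodeState(True, False, 0)])
    done = heartbeat_loop(lambda: next(states), lambda: print("terminating"),
                          interval_s=0.0)
    print(done)
```

Keeping the check on the instance means a tagged node that picks up a late call simply waits another beat instead of dropping the session.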

Lessons Learned

- Automated lifecycle control is critical: Decentralized, instance-level management of health and termination status drastically improved reliability during scaling operations.

- Separation of concerns improves resilience: By abstracting application health, DNS management, and infrastructure logic, each layer could evolve independently.

- Infrastructure as Code enables repeatability: Terraform-based automation reduced human error and made pre-prod testing identical to production launches.

- Real user load varies: Regional scaling policies needed regular tuning; CPU-based metrics provided consistent and cost-effective triggers.