VM Provisioning

For the resources included in the Always Free tier, see https://docs.cloud.oracle.com/iaas/Content/FreeTier/freetier_topic-Always_Free_Resources.htm

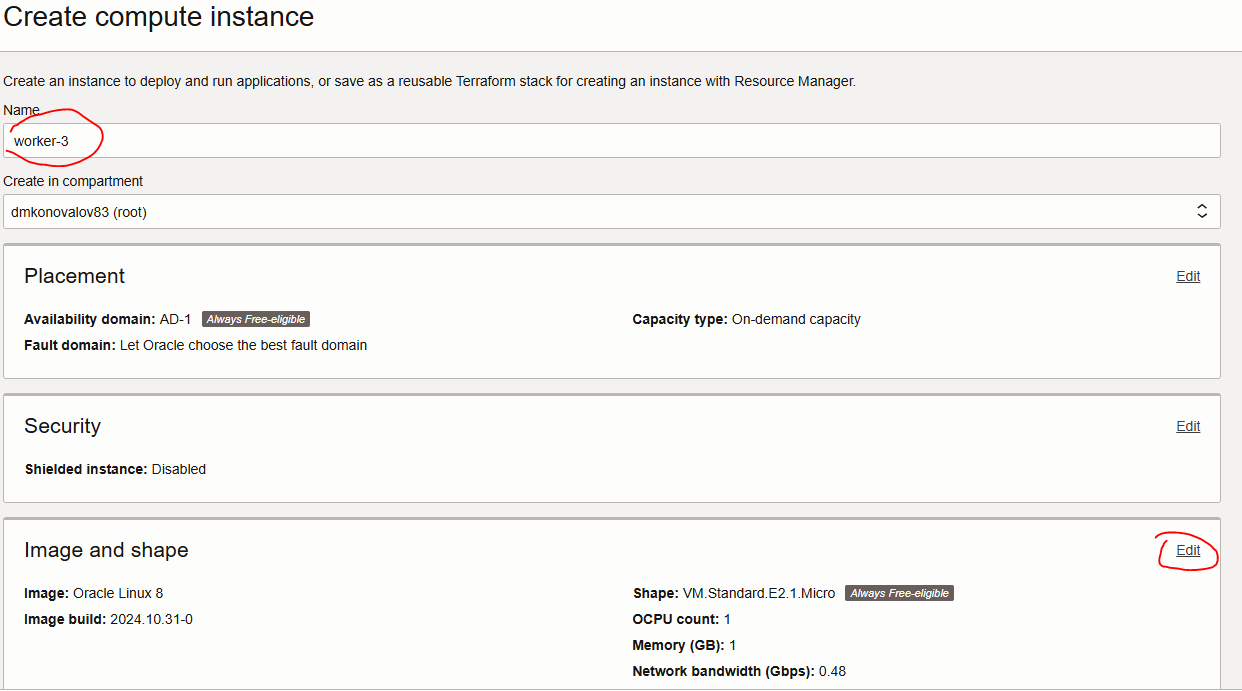

- After the account is created, navigate to https://cloud.oracle.com/compute/instances and click “Create Instance”. Set the desired instance name, then change the instance shape (VM type).

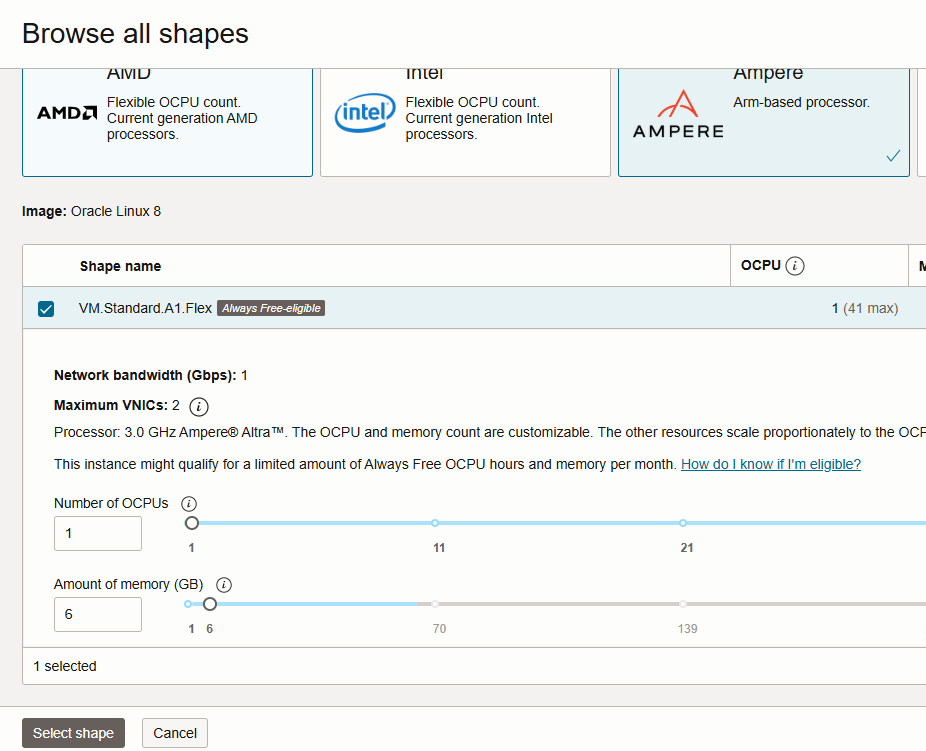

Pick the Ampere VM.Standard.A1.Flex shape and set the desired number of OCPUs and amount of memory (on Ampere A1, one OCPU is one vCPU).

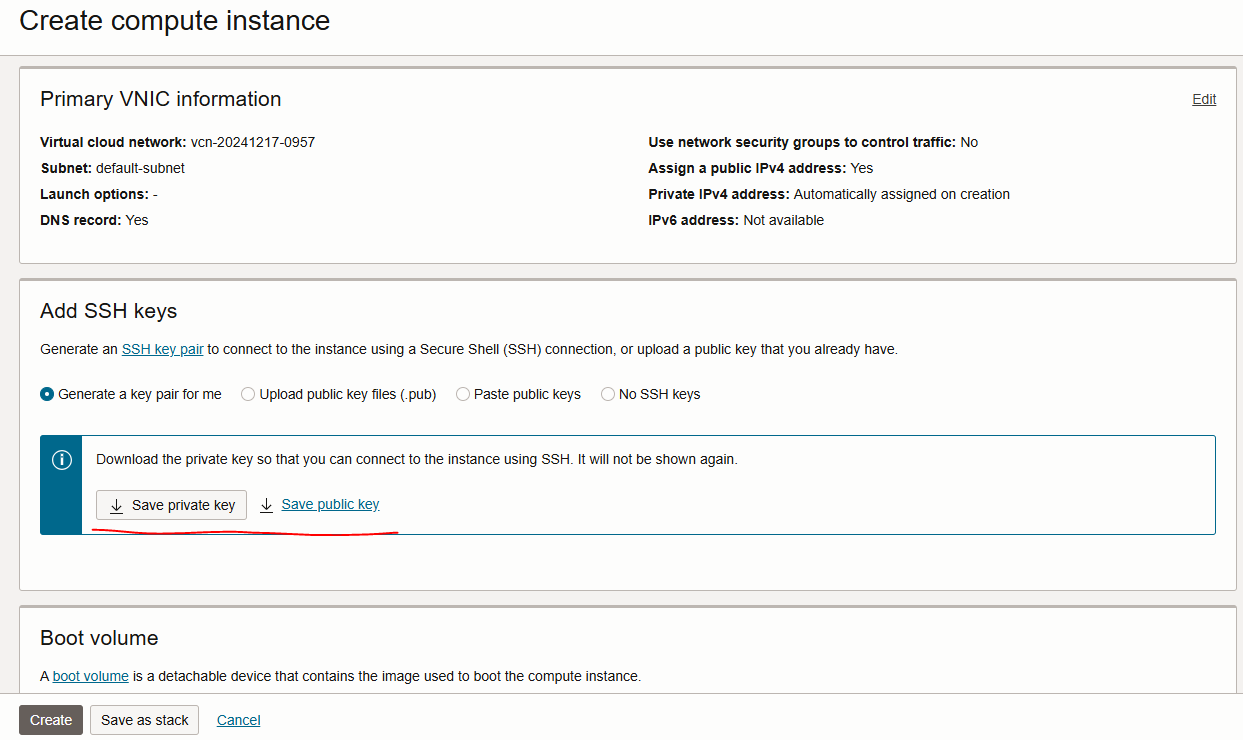
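When you split the free allowance between a master and one or more workers, a quick arithmetic check helps. The sketch below is a hypothetical helper, not part of the OCI tooling. It assumes the Always Free A1 allowance is 4 OCPUs and 24 GB of memory in total across all A1 instances (verify this against the page linked above), and that the control-plane node needs at least 2 CPUs and 2 GB of RAM, as the kubeadm documentation asks.

```bash
# Hypothetical helper: pass one "OCPUS MEM_GB" pair per node, control plane first.
# Assumed limits: 4 OCPUs / 24 GB in total (Always Free A1), and at least
# 2 OCPUs / 2 GB on the control-plane node (kubeadm minimum).
fits_a1_free_tier() {
  local max_ocpu=4 max_mem=24 total_ocpu=0 total_mem=0
  if [ $# -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: fits_a1_free_tier OCPUS MEM_GB [OCPUS MEM_GB ...]" >&2
    return 2
  fi
  # The first pair describes the control-plane node.
  if [ "$1" -lt 2 ] || [ "$2" -lt 2 ]; then
    echo "control plane needs at least 2 OCPUs and 2 GB" >&2
    return 1
  fi
  # Sum OCPUs and memory over all nodes.
  while [ $# -gt 0 ]; do
    total_ocpu=$(( total_ocpu + $1 ))
    total_mem=$(( total_mem + $2 ))
    shift 2
  done
  echo "total: ${total_ocpu} OCPUs, ${total_mem} GB (limit ${max_ocpu}/${max_mem})"
  # Exit status 0 only if both totals stay within the assumed allowance.
  [ "$total_ocpu" -le "$max_ocpu" ] && [ "$total_mem" -le "$max_mem" ]
}
```

For example, `fits_a1_free_tier 2 12 2 12` describes a master and one worker with 2 OCPUs and 12 GB each, which uses the whole assumed allowance and succeeds.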

Download and save the generated SSH key pair.

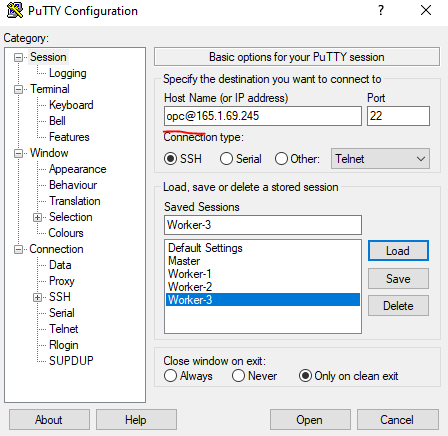

- To use the saved SSH key with PuTTY, convert it to PuTTY format with PuTTYgen, as described in https://www.oracle.com/webfolder/technetwork/tutorials/obe/cloud/ggcs/Change_private_key_format_for_Putty/Change_private_key_format_for_Putty.html. For Oracle Linux 8, log in as the `opc` user with the converted key.
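On Linux or macOS, the OpenSSH client can use the downloaded private key directly, without the PuTTY conversion. A minimal sketch, assuming a placeholder key path and public IP; `ssh_cmd_for_key` is a hypothetical helper. It restricts the key's permissions (OpenSSH refuses private keys that other users can read) and prints the matching `ssh` command for the `opc` user.

```bash
# Hypothetical helper: ssh_cmd_for_key KEY_PATH PUBLIC_IP [USER]
# USER defaults to "opc", the Oracle Linux default account.
ssh_cmd_for_key() {
  local key_path="$1" public_ip="$2" user="${3:-opc}"
  # OpenSSH rejects private keys with group/other permissions.
  chmod 600 "$key_path" || return 1
  printf 'ssh -i %s %s@%s\n' "$key_path" "$user" "$public_ip"
}
```

Run it with your own values, for example `ssh_cmd_for_key path/to/ssh-key.key PUBLIC_IP`, then run the command it prints.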

Common steps

Install and Configure Kubernetes Components

Install the required tools on all nodes:

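```bash
# Kubernetes
sudo tee /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v1.32/rpm/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.32/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

# The exclude line keeps routine "dnf update" runs from upgrading the Kubernetes
# packages; --disableexcludes=kubernetes lifts it for this install only
sudo dnf install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# kubelet restarts in a loop until kubeadm init/join configures it; this is expected
sudo systemctl enable --now kubelet

# Check versions
kubeadm version
kubectl version --client
```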
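pkgs.k8s.io publishes one repository per Kubernetes minor release, so `baseurl` and `gpgkey` must name the same `vX.Y`. A hypothetical helper, sketched under that assumption, prints the repo file for any minor version so the two URLs cannot drift apart:

```bash
# Hypothetical helper: k8s_repo_file vX.Y prints a kubernetes.repo for that minor release.
k8s_repo_file() {
  local minor="$1"
  # Accept only a minor version such as v1.32
  if ! printf '%s\n' "$minor" | grep -Eqx 'v[0-9]+\.[0-9]+'; then
    echo "expected a minor version like v1.32, got: $minor" >&2
    return 1
  fi
  cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}
```

Usage: `k8s_repo_file v1.32 | sudo tee /etc/yum.repos.d/kubernetes.repo`. On an existing cluster, moving to another minor release also requires a `kubeadm upgrade`, one minor version at a time.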

```bash
# Docker (its repository also provides containerd.io, the runtime Kubernetes uses)
sudo dnf install -y yum-utils device-mapper-persistent-data lvm2
sudo dnf -y install dnf-plugins-core
sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
sudo dnf config-manager --enable docker-ce-stable
sudo dnf install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# enable --now both enables and starts the service, so no separate start is needed
sudo systemctl enable --now docker

# Check version
docker --version
```

```bash
# Generate the containerd default config
# (the config shipped with containerd.io disables the CRI plugin that kubelet needs)
containerd config default | sudo tee /etc/containerd/config.toml > /dev/null

# Set the sandbox (pause) image to the version used by Kubernetes 1.32,
# whatever pause tag the generated default contains
sudo sed -i 's|sandbox_image = "registry.k8s.io/pause:[0-9.]*"|sandbox_image = "registry.k8s.io/pause:3.10"|' /etc/containerd/config.toml

# Set SystemdCgroup (NOT systemd_cgroup) to true
sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# Update the socket path (on Oracle Linux /var/run is a symlink to /run, so both name the same socket)
sudo sed -i 's|address = "/run/containerd/containerd.sock"|address = "/var/run/containerd/containerd.sock"|' /etc/containerd/config.toml

# Enable containerd and restart it to load the new config
sudo systemctl enable containerd
sudo systemctl restart containerd
```
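The `sed` edits above fail silently when a pattern does not match, so it is worth confirming the result. A minimal sketch (hypothetical helper) that assumes the containerd 1.x config layout with a `sandbox_image` key; containerd 2.x names these settings differently, so the check would need adjusting there.

```bash
# Hypothetical helper: check_containerd_config [CONFIG_PATH]
# Assumes the containerd 1.x config layout.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0
  if ! grep -q 'SystemdCgroup = true' "$cfg"; then
    echo "SystemdCgroup is not set to true" >&2; rc=1
  fi
  if ! grep -q 'sandbox_image = "registry.k8s.io/pause:3.10"' "$cfg"; then
    echo "sandbox_image is not registry.k8s.io/pause:3.10" >&2; rc=1
  fi
  # kubelet talks to containerd through the CRI plugin, so it must not be disabled.
  if grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$cfg"; then
    echo "the cri plugin is disabled" >&2; rc=1
  fi
  return "$rc"
}
```

Run `check_containerd_config` on each node; no output and exit status 0 mean all three settings are in place.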

```bash
# Disable swap
sudo swapoff -a

# Comment out the swap entry in /etc/fstab
sudo sed -i.bak '/\bswap\b/s/^/#/' /etc/fstab
```
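To confirm that swap is off now and stays off after a reboot, two small checks are enough: `/proc/swaps` lists active swap areas below a header line, and an uncommented `/etc/fstab` entry whose third field is `swap` would re-enable swap at boot. A sketch with hypothetical helper names; the file paths are parameters only so the checks can be run against copies.

```bash
# Hypothetical helper: succeeds if /proc/swaps lists any active swap area.
swap_is_active() {
  local swaps="${1:-/proc/swaps}"
  # Line 1 is the header; any further line is an active swap area.
  awk 'NR > 1 { n++ } END { exit !n }' "$swaps"
}

# Hypothetical helper: succeeds if an uncommented fstab entry has type "swap".
fstab_enables_swap() {
  local fstab="${1:-/etc/fstab}"
  awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"
}
```

Both commands should fail (non-zero exit status) on a correctly prepared node, e.g. `swap_is_active || echo "swap is off"`.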

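```bash
# Register the kernel modules Kubernetes needs so they are loaded at every boot
echo -e "br_netfilter\noverlay\nnf_conntrack\nip_vs\nip_vs_rr\nip_vs_wrr\nip_vs_sh\nxt_conntrack\nveth\ndm_thin_pool" | sudo tee /etc/modules-load.d/k8s.conf > /dev/null

# Load them now as well; the net.bridge sysctls below only exist once br_netfilter is loaded
sudo systemctl restart systemd-modules-load.service
```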
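To see which of the listed modules are actually loaded, compare the modules-load.d file against `/proc/modules`. A minimal sketch with a hypothetical helper name. Caveat: modules compiled into the kernel never appear in `/proc/modules`, so they are reported as missing too; look for those in `/lib/modules/$(uname -r)/modules.builtin` before worrying.

```bash
# Hypothetical helper: missing_modules [MODULES_LOAD_FILE] [PROC_MODULES_FILE]
# Prints each module from the config file that /proc/modules does not list.
missing_modules() {
  local conf="${1:-/etc/modules-load.d/k8s.conf}" proc="${2:-/proc/modules}"
  # First file: remember loaded module names (field 1).
  # Second file: print non-empty, non-comment entries that were not seen.
  awk 'FILENAME == ARGV[1] { loaded[$1] = 1; next }
       NF && $1 !~ /^#/ && !($1 in loaded) { print $1 }' "$proc" "$conf"
}
```

Empty output from `missing_modules` means every listed module is loaded.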

```bash
# Back up the existing sysctl.conf file
sudo cp /etc/sysctl.conf /etc/sysctl.conf.backup.$(date +%F-%T)

# Add the required network settings to sysctl.conf
echo "net.bridge.bridge-nf-call-iptables=1" | sudo tee -a /etc/sysctl.conf > /dev/null
echo "net.bridge.bridge-nf-call-ip6tables=1" | sudo tee -a /etc/sysctl.conf > /dev/null
echo "net.ipv4.ip_forward=1" | sudo tee -a /etc/sysctl.conf > /dev/null

# Apply the changes
sudo sysctl -p
```
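The live values can be checked directly, since each sysctl key maps to a file under `/proc/sys` with dots turned into slashes (the same values are shown by, e.g., `sysctl net.ipv4.ip_forward`). A sketch with a hypothetical helper name; the root directory is a parameter only so it can be exercised on a copy. A bridge key reported as `missing` usually means `br_netfilter` is not loaded.

```bash
# Hypothetical helper: check_k8s_sysctls [PROC_SYS_ROOT]
# Reports any of the three settings above that is not currently 1.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" rc=0 key file got
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> $root/net/ipv4/ip_forward
    file="$root/$(printf '%s' "$key" | tr . /)"
    if [ -r "$file" ]; then got=$(cat "$file"); else got=missing; fi
    if [ "$got" != 1 ]; then
      echo "$key = $got (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```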

```bash
# Update the OS
sudo dnf -y remove tuned-profiles-oci
sudo dnf -y update tuned
sudo dnf -y update

# Add firewall rules for Flannel and common services
sudo firewall-cmd --permanent --add-port=8285/udp      # Flannel UDP backend
sudo firewall-cmd --permanent --add-port=8472/udp      # Flannel VXLAN backend (the default)
sudo firewall-cmd --permanent --add-port=6783-6784/tcp # Weave Net (not used by Flannel)
sudo firewall-cmd --permanent --add-port=6783-6784/udp # Weave Net (not used by Flannel)
sudo firewall-cmd --permanent --add-port=53/tcp        # DNS
sudo firewall-cmd --permanent --add-port=53/udp        # DNS
sudo firewall-cmd --permanent --add-port=80/tcp        # HTTP
sudo firewall-cmd --permanent --add-port=443/tcp       # HTTPS
sudo firewall-cmd --permanent --add-source=10.0.0.0/16 # VCN CIDR (adjust if yours differs)
sudo firewall-cmd --reload

# Temporary workaround, still to be revisited: stop and disable firewalld entirely.
# This makes the rules above moot, and while firewalld stays disabled the
# role-specific firewall-cmd commands below fail with "FirewallD is not running".
sudo systemctl stop firewalld
sudo systemctl disable firewalld

# Check whether a reboot is required after the updates, and reboot if so
sudo dnf needs-restarting -r | grep -q "Reboot is required" && { echo "Reboot is required. Restarting now..."; sudo reboot; } || echo "No reboot is required."
```

Role-specific steps

Initialize the Kubernetes Control Plane (Master Node)

Run the following commands only on the master node:

```bash
# Install telnet and tmux (or screen)
sudo dnf install -y tmux telnet

# Update firewall rules
sudo firewall-cmd --permanent --add-port=6443/tcp        # Kubernetes API server
sudo firewall-cmd --permanent --add-port=10250/tcp       # Kubelet API
sudo firewall-cmd --permanent --add-port=2379-2380/tcp   # etcd (only for master nodes)
sudo firewall-cmd --permanent --add-port=10259/tcp       # kube-scheduler (secure port; 10251 is no longer used)
sudo firewall-cmd --permanent --add-port=10257/tcp       # kube-controller-manager (secure port; 10252 is no longer used)
sudo firewall-cmd --permanent --add-source=10.244.0.0/16 # Pod network CIDR
sudo firewall-cmd --reload

# Pull images
sudo kubeadm config images pull

# Check the cluster initialization in dry-run mode
sudo kubeadm init --pod-network-cidr=10.244.0.0/16 --dry-run 2>&1 | grep -iE "error|warning"
```
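To confirm which ports actually made it into the permanent firewalld configuration, compare the output of `sudo firewall-cmd --permanent --list-ports` (a space-separated list such as `6443/tcp 10250/tcp`) with the ports you expect. A minimal sketch with a hypothetical helper name; it only works while firewalld is running, and port ranges are compared as literal strings, so `2379-2380/tcp` must be listed exactly that way.

```bash
# Hypothetical helper: missing_ports "LISTED_PORTS" PORT...
# Prints every expected port that is absent from LISTED_PORTS.
missing_ports() {
  local listed=" $1 " port rc=0
  shift
  for port in "$@"; do
    case "$listed" in
      *" $port "*) ;;               # present
      *) echo "$port"; rc=1 ;;      # missing
    esac
  done
  return "$rc"
}
```

Usage on the master: `missing_ports "$(sudo firewall-cmd --permanent --list-ports)" 6443/tcp 10250/tcp 2379-2380/tcp`.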

Initialize the cluster:

```bash
sudo kubeadm init --pod-network-cidr=10.244.0.0/16
```

- Save the join command printed at the end of the `kubeadm init` output (it will look like kubeadm join … ); you need it to add worker nodes later.
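Before copying the saved command to the workers, a quick format check catches truncated copies. Bootstrap tokens have the form `[a-z0-9]{6}.[a-z0-9]{16}`, and the CA hash is `sha256:` followed by 64 hex digits. Tokens created by `kubeadm init` expire after 24 hours by default; running `sudo kubeadm token create --print-join-command` on the master prints a fresh join command. The helper below is a hypothetical sketch.

```bash
# Hypothetical helper: valid_join_command "kubeadm join ..."
# Succeeds only if both --token and --discovery-token-ca-cert-hash look well-formed.
valid_join_command() {
  local cmd="$1"
  printf '%s\n' "$cmd" | grep -Eq -e '--token[[:space:]]+[a-z0-9]{6}\.[a-z0-9]{16}([[:space:]]|$)' &&
    printf '%s\n' "$cmd" | grep -Eq -e '--discovery-token-ca-cert-hash[[:space:]]+sha256:[0-9a-f]{64}([[:space:]]|$)'
}
```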

```bash
# Set up kubectl for the master node
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Test the connection (the node reports NotReady until the pod network add-on is installed)
kubectl get nodes
```

```bash
# Download the official Flannel manifest (the project moved from coreos to flannel-io;
# -L makes curl follow GitHub's redirect to the latest release asset)
curl -LO https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Move the Flannel resources from the kube-flannel namespace to kube-system
sed -i 's/namespace: kube-flannel/namespace: kube-system/' kube-flannel.yml

# Apply the modified manifest to the Kubernetes cluster
kubectl apply -f kube-flannel.yml

# Verify the configuration has been applied correctly
kubectl get configmap kube-flannel-cfg -n kube-system -o yaml
```
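The `"Network"` value in Flannel's `net-conf.json` must equal the `--pod-network-cidr` passed to `kubeadm init` (10.244.0.0/16 here, which is also the default in Flannel's manifest). A small sketch with a hypothetical helper name that reads the ConfigMap YAML on stdin and prints that value:

```bash
# Hypothetical helper: extract the first "Network" value from Flannel's net-conf.json.
flannel_network() {
  # Keep only the "Network": "..." pair, then strip everything but the quoted value.
  grep -o '"Network": *"[^"]*"' | head -n 1 | sed 's/.*"\([^"]*\)"$/\1/'
}
```

Usage: `kubectl get configmap kube-flannel-cfg -n kube-system -o yaml | flannel_network` should print `10.244.0.0/16`.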

Join the Kubernetes Cluster (Worker Node)

```bash
# Update firewall rules
sudo firewall-cmd --permanent --add-port=10250/tcp       # Kubelet API
sudo firewall-cmd --permanent --add-port=30000-32767/tcp # NodePort services
sudo firewall-cmd --permanent --add-source=10.244.0.0/16
sudo firewall-cmd --reload
```

On each worker node, run the `kubeadm join` command printed earlier by `kubeadm init` on the master:

```bash
sudo kubeadm join <MASTER_IP>:6443 --token <TOKEN> --discovery-token-ca-cert-hash <HASH>
```
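If the join hangs, first check from the worker that the API server port on the master is reachable at all; a blocked port usually points at the OCI security list or firewalld rather than at Kubernetes. This sketch (hypothetical helper) uses bash's `/dev/tcp` redirection and coreutils `timeout`, so no extra tools are needed on the worker.

```bash
# Hypothetical helper: port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT opens within the timeout.
port_open() {
  local host="$1" port="$2" secs="${3:-5}"
  # bash -c receives HOST as $0 and PORT as $1; the connect error is silenced.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

Replace MASTER_IP with the master's private IP: `port_open MASTER_IP 6443 && echo reachable || echo blocked`.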

Back on the master node (where kubectl is configured), verify that the worker nodes have joined:

```bash
kubectl get nodes
```
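A newly joined node can stay NotReady for a minute while its Flannel pod starts. To list only the nodes that are not Ready yet, a small filter over the `kubectl get nodes` output helps (hypothetical helper; `kubectl wait --for=condition=Ready node --all --timeout=300s` is the built-in way to block until all nodes are Ready). STATUS can carry suffixes such as `Ready,SchedulingDisabled`, so anything starting with `Ready` counts as ready.

```bash
# Hypothetical helper: reads `kubectl get nodes` output on stdin and
# prints the names of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`; empty output means every node is Ready.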